Rethinking Zero-shot Video Classification: End-to-end Training for Realistic Applications

Biagio Brattoli Joseph Tighe Fedor Zhdanov

Pietro Perona Krzysztof Chalupka


Abstract

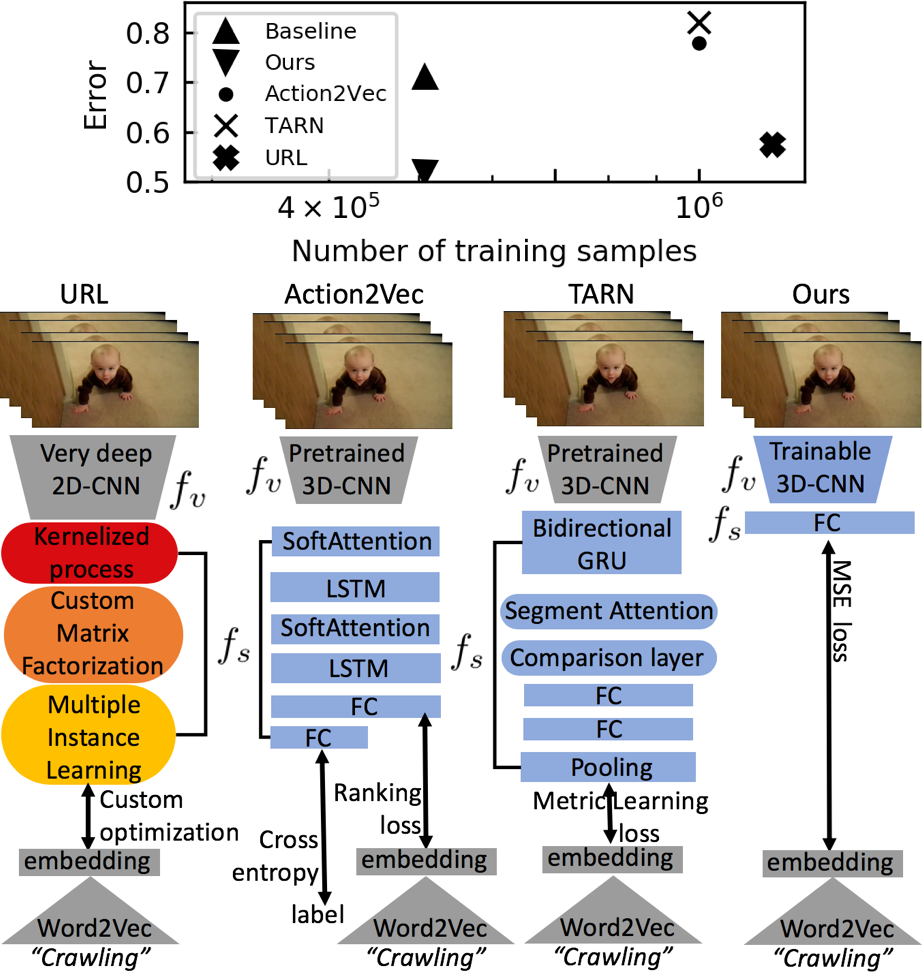

Trained on large datasets, deep learning (DL) can accurately classify videos into hundreds of diverse classes. However, video data is expensive to annotate. Zero-shot learning (ZSL) proposes one solution to this problem: ZSL trains a model once and generalizes to new tasks whose classes are not present in the training dataset. We propose the first end-to-end algorithm for ZSL in video classification. Our training procedure builds on insights from recent video classification literature and uses a trainable 3D CNN to learn the visual features. This is in contrast to previous video ZSL methods, which use pretrained feature extractors. We also extend the current benchmarking paradigm: previous techniques aim to make the test task unknown at training time but fall short of this goal. We encourage domain shift across training and test data and disallow tailoring a ZSL model to a specific test dataset. We outperform the state of the art by a wide margin.
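The abstract does not spell out how a model can label classes it never saw during training. A common mechanism, assumed here for illustration, is to embed videos and class names in a shared semantic space (for example, word vectors of the class names) and predict the unseen class whose name embedding lies closest to the video embedding. The sketch below shows that nearest-class step, plus a hypothetical way to keep the test task unknown at training time: drop any training class whose name is too similar to a test class, using an assumed threshold `tau`. All function names, the toy 3-D vectors, and `tau` are hypothetical; in the real setting the video embedding would come from the trained 3D CNN rather than being hard-coded.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def zero_shot_predict(video_emb: Vector, class_embs: Dict[str, Vector]) -> str:
    """Return the unseen class whose (hypothetical) name embedding is most similar to the video."""
    return max(class_embs, key=lambda name: cosine(video_emb, class_embs[name]))


def filter_train_classes(
    train_embs: Dict[str, Vector], test_embs: Dict[str, Vector], tau: float
) -> List[str]:
    """Keep only training classes whose similarity to every test class is at most tau.

    Illustrative assumption: removing near-duplicates of test classes prevents
    the training set from being tailored to a specific test dataset.
    """
    return [
        name
        for name, emb in train_embs.items()
        if all(cosine(emb, t) <= tau for t in test_embs.values())
    ]


# Toy semantic vectors standing in for word embeddings of class names (hypothetical).
test_classes = {"surfing": [1.0, 0.0, 0.0], "knitting": [0.0, 1.0, 0.0]}
train_classes = {"windsurfing": [0.95, 0.1, 0.0], "cooking": [0.0, 0.2, 1.0]}

video = [0.9, 0.1, 0.05]  # stand-in for a 3D CNN output projected into the same space
print(zero_shot_predict(video, test_classes))  # → surfing
print(filter_train_classes(train_classes, test_classes, tau=0.9))  # → ['cooking']
```

Here "windsurfing" is dropped because its toy embedding is nearly identical to "surfing", so a model trained on the filtered set cannot simply memorize a test class under another name.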

Paper

arXiv, 2020.

Citation

@InProceedings{brattoli2020zsl,
  title={Rethinking Zero-shot Video Classification: End-to-end Training for Realistic Applications},
  author={Brattoli, Biagio and Tighe, Joseph and Zhdanov, Fedor and Perona, Pietro and Chalupka, Krzysztof},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2020}
}